AI/ML (artificial intelligence / machine learning) workloads in datacenter applications are increasingly being offloaded to flexible FPGA-based subsystems. These FPGA-based AI/ML offload engines are more power- and compute-efficient than GPU-based systems.

Offload engines are special-purpose hardware platforms built for very specific computational needs. In datacenters, they are increasingly being deployed to speed up AI/ML applications. Cloud computing has naturally enabled the aggregation of large datasets, and the adoption of AI and ML techniques to speed up analysis of that data, or to look for novel applications of existing data, continues to accelerate.

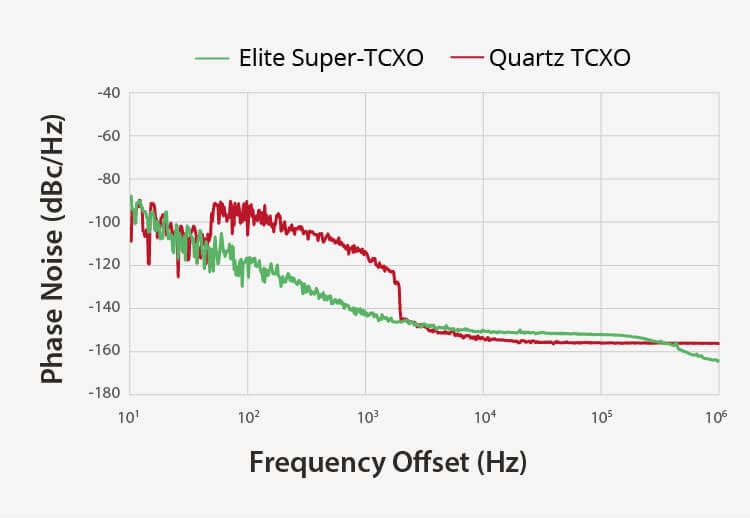

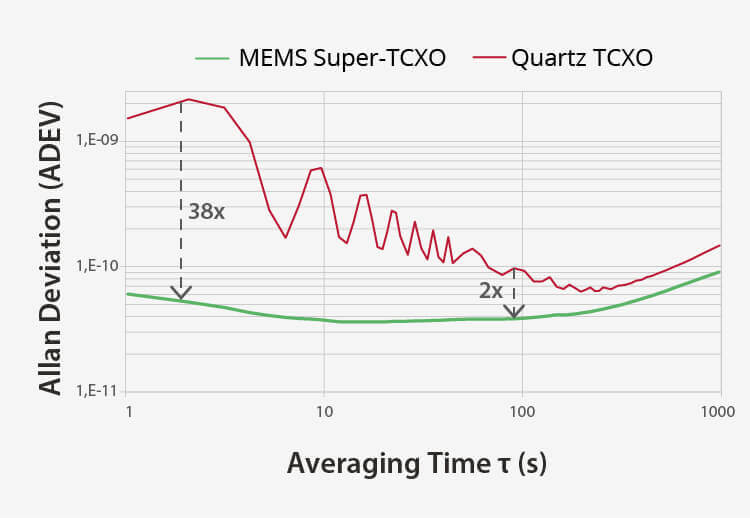

SiTime network synchronizer products, along with precision TCXOs and OCXOs, are key technology enablers for precise timekeeping in datacenters that deploy AI/ML offload engines.